Plato Systems: Radar-Camera Perception for Industrial Automation

Calibrating & Annotating Radar-Camera Detection & Tracking Data using Real-Time Kinematic GPS

February-October 2021

TLDR: Plato Systems (RTK GPS Calibration & Annotation of Radar-Camera Perception)

Problem: Radar-based detection and tracking struggles with sparse data and artifacts such as multi-path reflections, and precise calibration between the radar, camera, and world coordinate systems is necessary for robust sensor fusion and localization.

Solution & Contribution: I built a high-volume annotation and localization pipeline that uses centimeter-accurate real-time kinematic (RTK) GPS to calibrate, evaluate, and train Plato's mm-wave radar range-doppler-angle tracking system. The pipeline substantially improved the product's localization and visualization in end-user deployments, furthering Plato’s mission to provide robust and beautiful localization and tracking to enterprise customers.

The basic premise:

Radar-camera sensor fusion provides an extremely robust, long-range detection and tracking system for security and industrial automation applications

However:

Camera-based detection and tracking is a mostly solved problem

Radar-based detection and tracking struggles with sparse data and artifacts such as multi-path reflections

Precise calibration between the sensing systems is necessary for robust sensor fusion and localization

The solution:

Use a high-accuracy ground truth localization system for calibration and annotation of the radar-camera perception module

Centimeter-Accuracy Localization with L1/L2 Carrier-Phase Enhancement

a.k.a. Real-Time Kinematic (RTK) GPS

After surveying a range of high-accuracy, high-precision localization methods, from ultra-wideband (UWB) ranging to infrared localization to RFID tags, I settled on real-time kinematic GPS because it can localize any object (static or moving) with centimeter accuracy at low cost (~$100 per unit), outdoors, nearly anywhere in the continental United States, as long as an RTCM-transmitting base station is within several miles of your location.

Using the centimeter-accurate latitude, longitude, altitude, and GPS Doppler velocity readings from the RTK GPS system, alongside a prior RTK GPS measurement of the position and yaw orientation of the radar-camera perception unit, I recorded 10 Hz, centimeter-accurate range, Doppler velocity, and azimuth (yaw) angle measurements of a tracked object in the radar ground-plane coordinate system. Combined with a simple 2-dimensional Kalman filter, these measurements allowed us to directly annotate mm-wave radar range-doppler-angle data.
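The conversion from RTK fixes to radar measurements can be sketched as follows. This is a minimal illustration, not the production code: it assumes the radar's own RTK fix is the local origin, that the radar is stationary, that its yaw is given as the compass heading of its boresight (clockwise from north), and that the RTK velocity is available as east/north components. All function names are hypothetical. WGS-84 fixes go to local East-North-Up (ENU) via ECEF, and the target is then expressed as ground-plane range, azimuth, and range rate (the RTK velocity projected onto the line of sight).

```python
import math

# WGS-84 ellipsoid constants
WGS84_A = 6378137.0                    # semi-major axis [m]
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg, lon_deg, alt_m):
    """WGS-84 latitude/longitude [deg] and ellipsoidal height [m] -> ECEF [m]."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1.0 - WGS84_E2 * math.sin(lat) ** 2)
    return ((n + alt_m) * math.cos(lat) * math.cos(lon),
            (n + alt_m) * math.cos(lat) * math.sin(lon),
            (n * (1.0 - WGS84_E2) + alt_m) * math.sin(lat))


def geodetic_to_enu(lat_deg, lon_deg, alt_m, ref_lat_deg, ref_lon_deg, ref_alt_m):
    """East-North-Up offset [m] of a fix relative to a reference fix (e.g. the radar)."""
    x, y, z = geodetic_to_ecef(lat_deg, lon_deg, alt_m)
    x0, y0, z0 = geodetic_to_ecef(ref_lat_deg, ref_lon_deg, ref_alt_m)
    dx, dy, dz = x - x0, y - y0, z - z0
    lat, lon = math.radians(ref_lat_deg), math.radians(ref_lon_deg)
    sl, cl, so, co = math.sin(lat), math.cos(lat), math.sin(lon), math.cos(lon)
    east = -so * dx + co * dy
    north = -sl * co * dx - sl * so * dy + cl * dz
    up = cl * co * dx + cl * so * dy + sl * dz
    return east, north, up


def to_radar_frame(east, north, v_east, v_north, boresight_heading_deg):
    """Ground-plane range [m], azimuth [deg, + = left of boresight], range rate [m/s]."""
    h = math.radians(boresight_heading_deg)   # compass heading, clockwise from north
    x_fwd = east * math.sin(h) + north * math.cos(h)
    y_left = -east * math.cos(h) + north * math.sin(h)
    rng = math.hypot(x_fwd, y_left)
    azimuth = math.degrees(math.atan2(y_left, x_fwd))
    # Radial (Doppler) velocity of the target along the line of sight, stationary radar
    range_rate = (v_east * east + v_north * north) / rng if rng > 0 else 0.0
    return rng, azimuth, range_rate


# Usage: radar at the reference fix facing east; target ~11 m north, walking north at 1 m/s
e, n, _ = geodetic_to_enu(37.4001, -122.1, 10.0, 37.4, -122.1, 10.0)
rng, az, rr = to_radar_frame(e, n, 0.0, 1.0, boresight_heading_deg=90.0)
print(rng, az, rr)
```

The short ENU baselines involved (tens to hundreds of meters) make the ground-plane approximation reasonable, but the ECEF route avoids the distortion a naive degrees-to-meters scaling would introduce.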

Using tracking results from our vision detection and tracking system (or by manually clicking the antenna’s position as it moved through the camera frame on a time-synchronized* feed), I also computed a highly accurate homography from the camera image plane to the radar ground plane. This allowed us to build far more robust fusion-based detection and tracking from both vision and radar tracking results, exploiting the complementary strengths and weaknesses of the two systems in an Unscented Kalman Filter to produce high-precision, high-recall detection and tracking of people, cars, bikes, and more.
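The fusion idea can be illustrated with a simplified stand-in. The system described above used an Unscented Kalman Filter; the sketch below instead uses a plain linear constant-velocity Kalman filter, which is sufficient when both sensors are reduced to ground-plane position measurements (radar positions directly, vision positions after mapping through the homography). The class name, the process-noise value, and the per-sensor noise levels are hypothetical tuning choices, not values from the original system.

```python
import numpy as np


class ConstantVelocityKF:
    """Linear Kalman filter with state [x, y, vx, vy] in the radar ground plane."""

    def __init__(self, q=0.5):
        self.x = np.zeros(4)                 # state mean
        self.P = np.eye(4) * 100.0           # large initial uncertainty
        self.q = q                           # white-acceleration noise intensity (hypothetical)
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],
                           [0.0, 1.0, 0.0, 0.0]])  # both sensors observe position only

    def predict(self, dt):
        F = np.eye(4)
        F[0, 2] = F[1, 3] = dt
        # Discretized white-noise-acceleration process noise, same for each axis
        q1 = self.q * np.array([[dt**3 / 3, dt**2 / 2], [dt**2 / 2, dt]])
        Q = np.zeros((4, 4))
        Q[np.ix_([0, 2], [0, 2])] = q1       # x and vx
        Q[np.ix_([1, 3], [1, 3])] = q1       # y and vy
        self.x = F @ self.x
        self.P = F @ self.P @ F.T + Q

    def update(self, z, sigma):
        """Fuse one 2-D position measurement z with isotropic std-dev sigma [m]."""
        R = np.eye(2) * sigma**2
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P


# Usage: a target walking along +x at 1 m/s, observed at 10 Hz by both sensors
kf = ConstantVelocityKF()
dt = 0.1
for k in range(1, 51):
    kf.predict(dt)
    truth = np.array([k * dt, 0.0])
    kf.update(truth, sigma=0.5)   # radar-derived position (hypothetical noise level)
    kf.update(truth, sigma=0.2)   # vision-derived position via homography (hypothetical)
print(kf.x)                       # fused position and velocity estimate
```

Giving each sensor its own measurement noise is what lets the filter lean on whichever sensor is more reliable; a UKF generalizes this to nonlinear measurements such as raw range and azimuth.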

*Note: millisecond-level time synchronization was provided by a separate GPS unit attached to the radar-camera perception unit's CPU, which periodically set the system clock to GPS time so that it stayed synchronized with all RTK GPS measurements.
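Once the sensor clock and the RTK fixes share GPS time, ground truth can be aligned to each camera or radar frame by interpolation. The sketch below assumes linear interpolation between consecutive 10 Hz fixes is adequate at walking or driving speeds; the function name is hypothetical, and frames falling outside the span of RTK data are marked NaN rather than extrapolated.

```python
import numpy as np


def interpolate_fixes(fix_t, fix_xy, frame_t):
    """Interpolate RTK ground-plane fixes onto sensor frame timestamps.

    fix_t:   (N,) increasing GPS-time stamps of RTK fixes [s]
    fix_xy:  (N, 2) ground-plane positions [m]
    frame_t: (M,) frame timestamps on the same GPS-disciplined clock [s]
    """
    fix_t = np.asarray(fix_t, dtype=float)
    fix_xy = np.asarray(fix_xy, dtype=float)
    frame_t = np.atleast_1d(np.asarray(frame_t, dtype=float))
    out = np.column_stack([np.interp(frame_t, fix_t, fix_xy[:, k]) for k in range(2)])
    # np.interp clamps at the ends; mark out-of-span frames as missing instead
    outside = (frame_t < fix_t[0]) | (frame_t > fix_t[-1])
    out[outside] = np.nan
    return out


# Usage: 10 Hz fixes of a target moving at 2 m/s along x, sampled at a few frame times
fix_t = np.arange(11) * 0.1
fix_xy = np.column_stack([2.0 * fix_t, np.zeros_like(fix_t)])
frames = interpolate_fixes(fix_t, fix_xy, [0.05, 0.5, 1.5])
print(frames)
```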

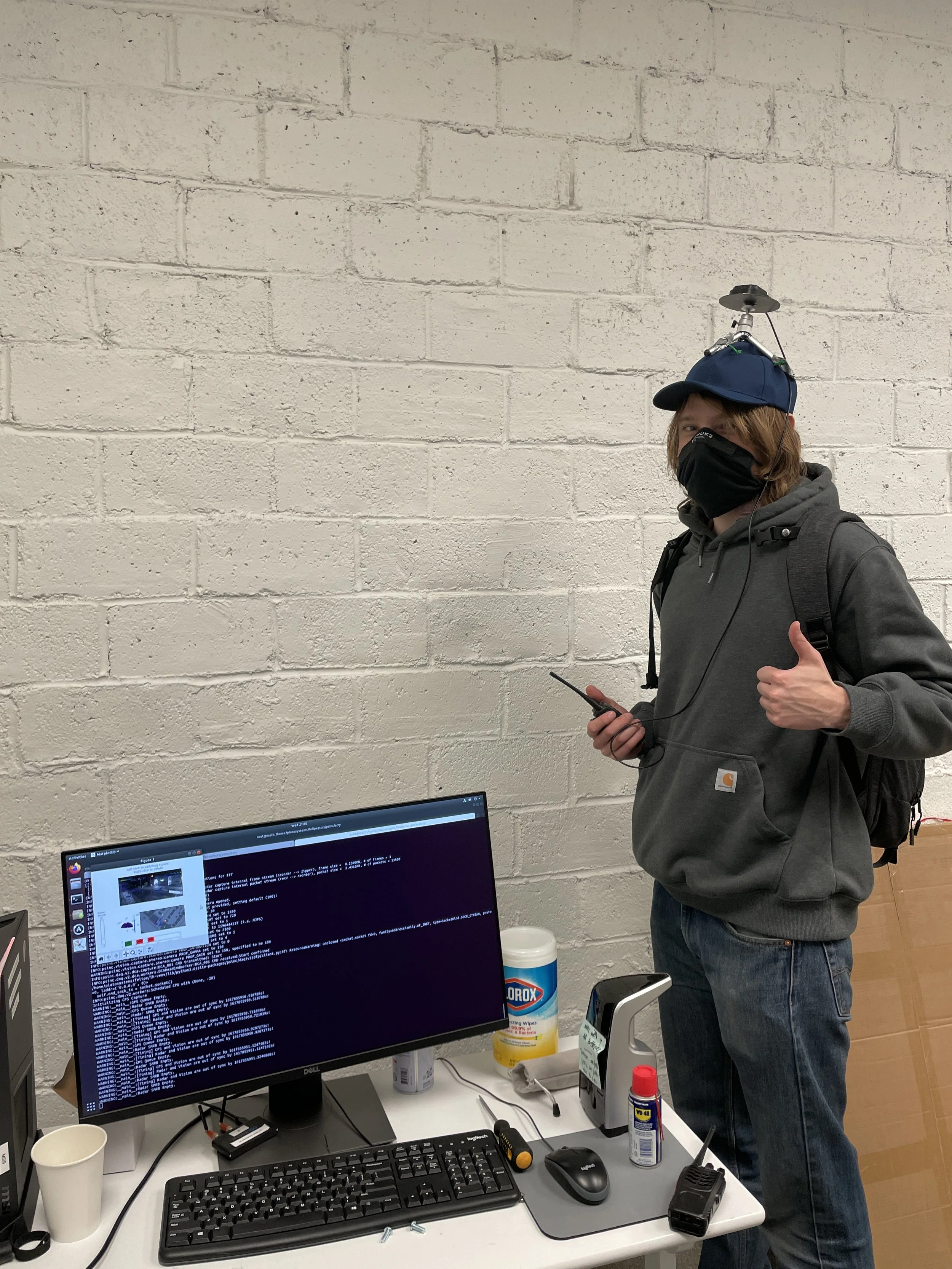

Left: multi-band L1/L2 antenna mounted on a person (the handheld radio was used to relay verbal instructions), connected via an SMA cable to a u-blox ZED-F9P high-precision GPS chip, which receives RTCM corrections and transmits the resulting RTK GPS position data over a 500 mW 915 MHz radio link.

Right: video showing the first use of the radar-vision-RTK GPS calibration tool I built to compute a radar-vision-world transform: specifically, a translation and yaw rotation between the radar ground plane and the world, and a homography between the radar ground plane and the camera pixel coordinate system. The user clicks on the object or person of interest to build a set of associations between ground-truth GPS points and camera pixels, and the tool solves the Perspective-n-Point problem to compute the homography. (At this point there was a slight delay in GPS radio transmission and live visualization; this was later fixed.)
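The calibration tool above solves Perspective-n-Point. As a simpler swapped-in alternative (not the tool's actual method), the ground-plane-to-pixel homography can be estimated directly from four or more clicked correspondences with the normalized Direct Linear Transform (DLT). The sketch assumes ground points are already in a local metric frame such as the radar ground plane; function names are hypothetical.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: zero mean, average distance sqrt(2) from the origin."""
    pts = np.asarray(pts, dtype=float)
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.column_stack([pts, np.ones(len(pts))])
    return (T @ homog.T).T, T


def fit_homography(ground_xy, pixels_uv):
    """Estimate H (3x3) with pixel ~ H @ [x, y, 1] from >= 4 correspondences via DLT."""
    g, Tg = _normalize(ground_xy)
    p, Tp = _normalize(pixels_uv)
    rows = []
    for (x, y, _), (u, v, _) in zip(g, p):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows))
    Hn = vt[-1].reshape(3, 3)             # least-squares null vector of the DLT system
    H = np.linalg.inv(Tp) @ Hn @ Tg       # undo both normalizations
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (N, 2) points through H with the perspective divide."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Usage: recover a synthetic homography from a 3x3 grid of ground points [m]
H_true = np.array([[50.0, 2.0, 640.0], [1.0, -10.0, 900.0], [0.0, 0.02, 1.0]])
ground = np.array([[x, y] for x in (-5.0, 0.0, 5.0) for y in (5.0, 15.0, 30.0)])
pixels = apply_homography(H_true, ground)
H_fit = fit_homography(ground, pixels)
print(np.round(H_fit, 4))
```

With real clicked points the fit is a least-squares solution, so spreading correspondences across the whole field of view matters more than adding many clustered clicks.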

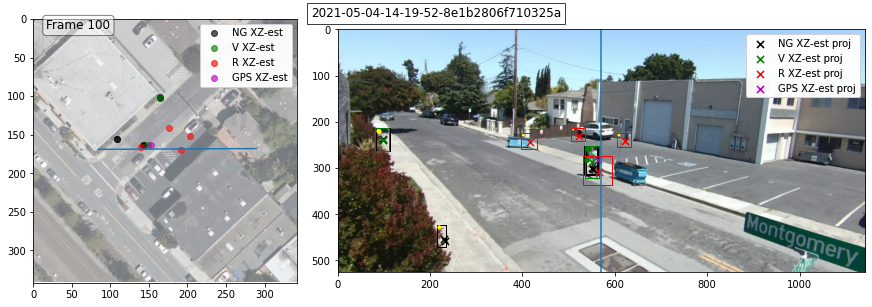

Figure showing the reprojection error in each coordinate system (left: UTM world coordinate system / radar ground plane in meters, right: camera pixel coordinate system) after RTK GPS calibration.
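The meter-scale error in the left panel can be read as the residual of the radar-to-world translation-and-yaw fit. A minimal sketch, assuming matched radar ground-plane and UTM points from the same RTK fixes, fits yaw and translation in closed form (2-D Procrustes, rotation plus translation, no scale) and reports per-point residuals in meters. The function names are hypothetical.

```python
import numpy as np


def _rot(yaw):
    return np.array([[np.cos(yaw), -np.sin(yaw)], [np.sin(yaw), np.cos(yaw)]])


def fit_yaw_translation(radar_xy, world_xy):
    """Least-squares fit of world ~ R(yaw) @ radar + t from matched 2-D points."""
    a = np.asarray(radar_xy, dtype=float)
    b = np.asarray(world_xy, dtype=float)
    ca, cb = a.mean(axis=0), b.mean(axis=0)
    a0, b0 = a - ca, b - cb               # centering removes the translation
    # Closed-form optimal angle: maximize sum of b_i . R a_i
    num = np.sum(a0[:, 0] * b0[:, 1] - a0[:, 1] * b0[:, 0])
    den = np.sum(a0[:, 0] * b0[:, 0] + a0[:, 1] * b0[:, 1])
    yaw = np.arctan2(num, den)
    t = cb - _rot(yaw) @ ca
    return yaw, t


def residuals_m(radar_xy, world_xy, yaw, t):
    """Per-point Euclidean error [m] after mapping radar points into the world frame."""
    pred = np.asarray(radar_xy, dtype=float) @ _rot(yaw).T + t
    return np.linalg.norm(pred - np.asarray(world_xy, dtype=float), axis=1)


# Usage: synthetic radar points mapped into UTM-scale coordinates
radar = np.array([[1.0, 5.0], [3.0, 12.0], [-4.0, 20.0], [6.0, 8.0]])
t_true = np.array([500000.0, 4140000.0])
world = radar @ _rot(0.3).T + t_true
yaw, t = fit_yaw_translation(radar, world)
err = residuals_m(radar, world, yaw, t)
print(yaw, t, err.max())
```

Centering both point sets before solving for the angle keeps the large UTM offsets from degrading numerical precision.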

Using RTK GPS to Calibrate and Annotate Radar-Vision Detection and Tracking

Figure showing RTK GPS annotation in the ground-plane and pixel coordinate systems. This system allowed us to generate high-volume annotations for radar and vision detection and tracking models in any environment where we could attach the RTK system to cars, people, bikes, etc. This example shows a case where the radar detection and tracking system produced false positives caused by multi-path reflections (which were filtered out of the final output [black] by vision detection and tracking). The high-volume ground-truth annotation system can be, and is being, used to further improve radar detection and tracking so that it filters out multi-path reflections without the aid of vision.
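One way RTK ground truth can turn into radar training labels is by gating: detections close to a ground-truth point are labeled true positives, and isolated detections become candidate multi-path ghosts. The sketch below is hypothetical (function name, 1 m gate, and greedy nearest-neighbor matching are all assumptions) and operates on a single time-aligned frame.

```python
import numpy as np


def label_detections(detections_xy, truth_xy, gate_m=1.0):
    """Label each radar detection in one frame as matched (True) or unmatched (False).

    Greedy nearest-neighbor matching: each RTK ground-truth point claims its closest
    detection within gate_m; each detection can be claimed at most once.
    """
    dets = np.asarray(detections_xy, dtype=float).reshape(-1, 2)
    truth = np.asarray(truth_xy, dtype=float).reshape(-1, 2)
    labels = np.zeros(len(dets), dtype=bool)
    if len(dets) == 0 or len(truth) == 0:
        return labels
    # Pairwise distances [m]: rows = detections, columns = ground-truth points
    dist = np.linalg.norm(dets[:, None, :] - truth[None, :, :], axis=2)
    for j in range(len(truth)):
        i = int(np.argmin(dist[:, j]))
        if dist[i, j] <= gate_m:
            labels[i] = True
            dist[i, :] = np.inf            # prevent reuse by another truth point
    return labels


# Usage: one true target, one distant ghost, and one extra detection near the target
labels = label_detections([[10.2, 0.1], [25.0, 3.0], [10.5, 0.0]], [[10.0, 0.0]])
print(labels)
```

For extended targets such as cars, which can produce several valid returns, a variant that accepts every detection inside the gate would be more appropriate than one-to-one matching.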

Video showing far more accurate detection and tracking of a person, in both the bird’s-eye view (BEV) and the camera pixel coordinate system, resulting from the improved radar-to-camera-to-world transform.

Figure showing the basic system setup for centimeter-accurate carrier-phase enhancement (real-time kinematic GPS), which requires a base station that tracks at least 5 satellites in common with the rover and transmits carrier-phase corrections to the rover being localized.
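Carrier-phase corrections reach centimeter level because the carrier-phase observable measures a fraction of a carrier cycle, and the GPS carriers have decimeter-scale wavelengths. The arithmetic below computes λ = c/f for the standard GPS L1 and L2 carrier frequencies, plus the L1-L2 wide-lane combination commonly used to help resolve integer cycle ambiguities. It is an illustration of the scales involved, not a description of the receiver's internal algorithm.

```python
C = 299_792_458.0        # speed of light [m/s]
F_L1 = 1575.42e6         # GPS L1 carrier frequency [Hz]
F_L2 = 1227.60e6         # GPS L2 carrier frequency [Hz]


def wavelength(freq_hz):
    """Carrier wavelength [m] for a given frequency [Hz]."""
    return C / freq_hz


lambda_l1 = wavelength(F_L1)            # roughly 19 cm
lambda_l2 = wavelength(F_L2)            # roughly 24 cm
lambda_wide = wavelength(F_L1 - F_L2)   # wide-lane combination, roughly 86 cm

print(f"L1: {lambda_l1:.4f} m, L2: {lambda_l2:.4f} m, wide-lane: {lambda_wide:.4f} m")
```

Once the integer number of cycles is fixed using the base station's corrections, resolving a small fraction of a 19 cm cycle is what makes centimeter-level positioning possible.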

Figure showing a multi-band L1/L2 antenna connected via an SMA cable to a u-blox ZED-F9P high-precision GPS chip, which receives RTCM corrections and transmits the resulting RTK GPS position data over a 500 mW 915 MHz radio link.